Irfan Muzaffar

In developing nations, including Pakistan, Education Management Information Systems (EMISs) are primarily used for reporting rather than for driving improvement in the education sector. As a result, schools, which are crucial sources of information and action, remain unseen in the aggregated statistics presented in EMIS reports. This policy challenge is prevalent in multiple countries, including those partnered with the Global Partnership for Education (GPE), especially in the Europe, Asia and Pacific (EAP) region.

Consider the National Education Management Information System (NEMIS) of Pakistan. The aim of NEMIS is to optimize the management of information and data. To achieve this goal, NEMIS focuses on enhancing the quality and accuracy of the data, streamlining the data collection and processing procedures, and strengthening the management of information flow within and outside the organization. These efforts are expected to result in the production of up-to-date and reliable reports, including the integrated report of the annual school censuses conducted in each of the constituent units of Pakistan. While these objectives are laudable, they do not fully leverage the potential of a management information system. To truly realize the benefits of this system, there must be a focus on using it to drive educational improvement at the level of schools.

Achieving school-level improvements using data necessitates the reconceptualization of data collection, integration, and utilization. This blog provides a concise examination of the key attributes of EMIS-based school improvement initiatives and discusses the School Improvement Framework (SIF) recently instituted by the School Education Department (SED), Government of Punjab (GoPb) as a valuable illustration of the operation of EMIS-based school improvement initiatives at scale.

School Improvement Framework (SIF): The Case of Punjab

The emergence of Large-scale Monitoring Systems, their potential, and their sub-optimal use

EMISs have been in use in Pakistan since the early 1990s. The primary purpose of these systems is to provide a statistical snapshot of the state of education in the country through annual reports that include information on the number and type of institutions, as well as various participation indicators such as Gross Enrolment Rate (GER), Net Enrolment Rate (NER), Survival and Transition rates, and Adult Literacy. However, as mentioned above, despite the wealth of information contained in these reports, there is little evidence that the data collected by EMIS is being used to improve the quality of schools in the country.

Starting around 2005, significant changes were made to the way data was collected from schools, providing the foundation for using EMIS for school improvement. The Government of Punjab, with support from development partners such as the World Bank, introduced a Large-scale Monitoring System (LSMS) under the oversight of the Programme Management and Implementation Unit (PMIU). The PMIU hired over 900 monitors to collect data from 60 schools each month on a set of predetermined indicators. By 2012-13, a similar LSMS was established in Khyber Pakhtunkhwa (KP) under the name of Independent Monitoring Unit (IMU) with support from the UK Department for International Development (DFID). The IMU was later renamed the Education Monitoring Authority (EMA). In 2015, the Sindh School Monitoring System was established to implement LSMS in Sindh. The LSMSs in Punjab, KP, and Sindh gathered monthly information on student and teacher involvement and the school environment. However, even with the frequency and accuracy of data collection, these systems still did not fully realize their potential in accurately identifying and addressing the particular needs of each individual school.

Here is why.

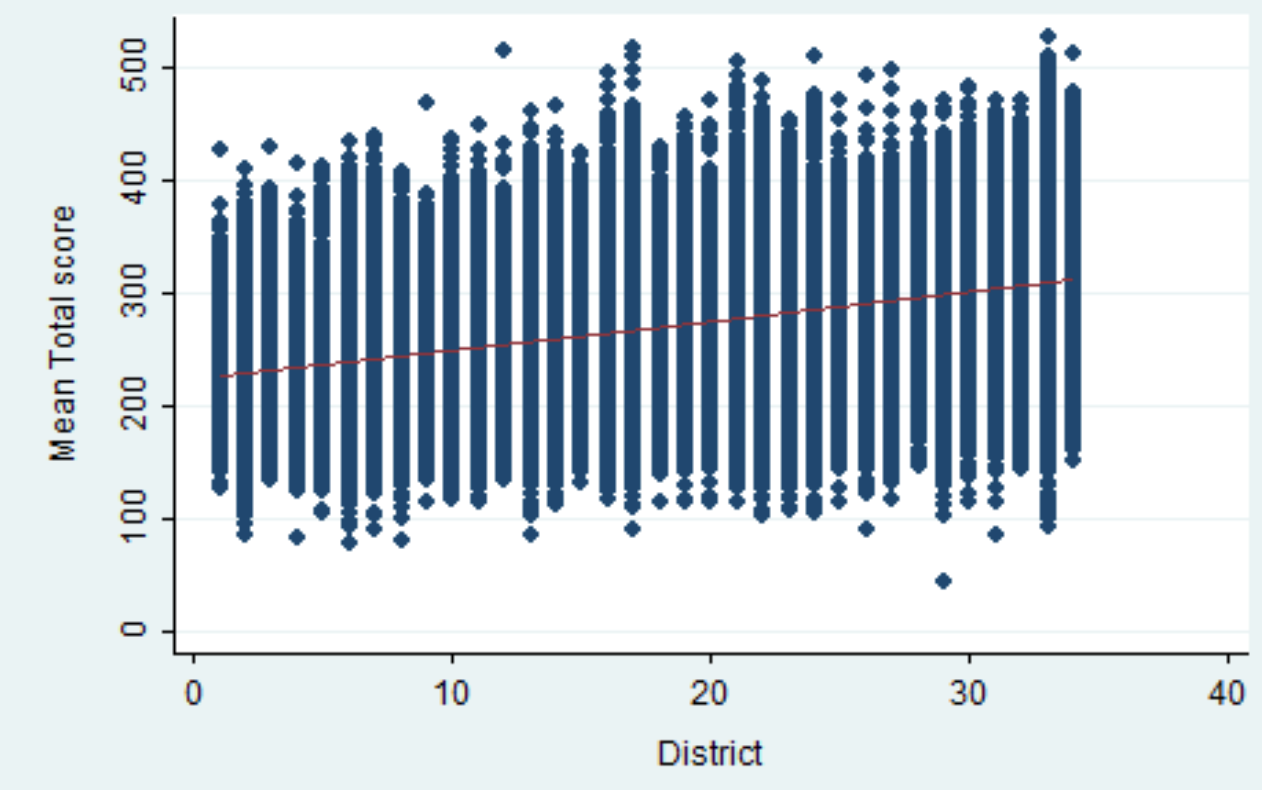

The current method of organizing the data collected by LSMS has a major flaw: it ranks and compares performance at the district and sub-district level, so the specific needs of individual schools disappear from view. The intra-district variation between schools, as shown in the following chart, is significant but is completely obscured by higher-level aggregates.

Intra-district variation of schools’ performance in a given district

Note: The straight, red line depicts the mean score of the district. The relative flatness of the line connecting the districts’ average scores is in stark contrast to the considerable range of performance within each district.
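The point made by the chart can be reproduced with a small sketch. The district names and school scores below are invented purely for illustration and are not drawn from any LSMS dataset. The sketch compares each district's mean score with the range of scores among its schools.

```python
from statistics import mean

# Hypothetical school scores (0-100) grouped by district; not real LSMS data.
scores_by_district = {
    "District A": [35, 48, 62, 77, 90],
    "District B": [40, 55, 60, 70, 85],
    "District C": [30, 50, 65, 80, 88],
}

def summarize(scores):
    """Return each district's mean score and its within-district range."""
    return {
        district: {
            "mean": mean(values),
            "range": max(values) - min(values),
        }
        for district, values in scores.items()
    }

summary = summarize(scores_by_district)
for district, stats in summary.items():
    print(f"{district}: mean={stats['mean']:.1f}, range={stats['range']}")
```

In this invented example the three district means differ by less than one point, while the gap between the strongest and weakest school within each district is 45 points or more. A report built only on the district means would show three near-identical districts.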

As a result, despite the LSMS producing reports that serve the interests of higher-level stakeholders, it does not provide in-depth information about individual schools, depriving them of the ability to assess their own performance and outcomes. To address this, it was necessary to implement a new, innovative system that would allow for a systematic evaluation of each school. This would provide a solid foundation for targeted actions by stakeholders at various levels, including the school, sub-district, district, and province.

The fundamental characteristics of a Data-Driven School Improvement (DSI) Innovation

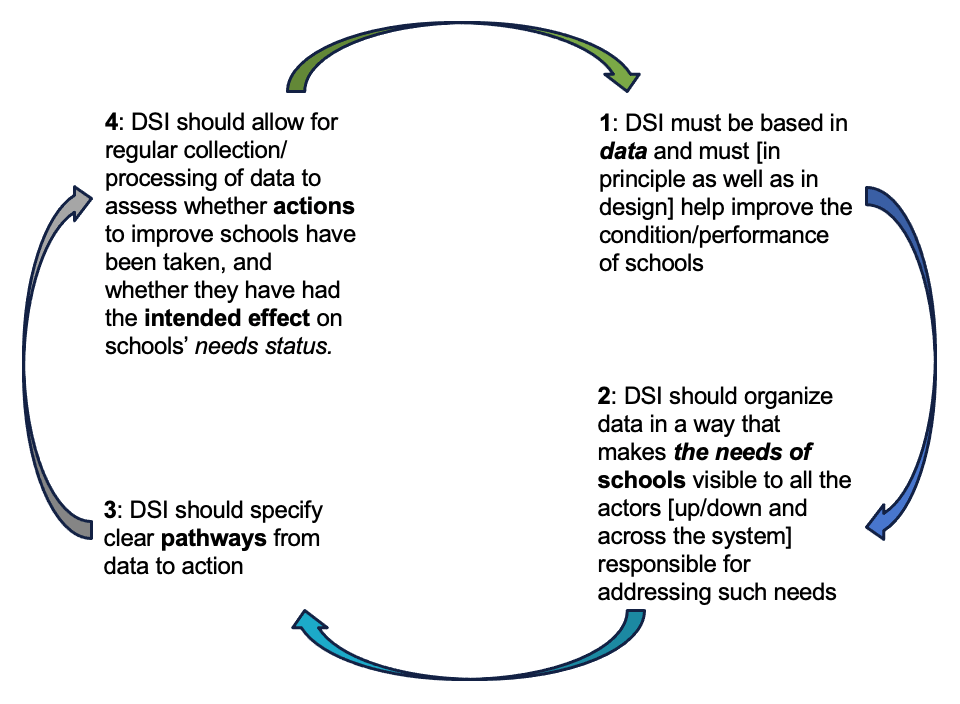

Innovation in public administration involves transforming the organization of government elements (such as LSMS) by identifying problems, creating new processes, and implementing solutions to existing issues. The inability to identify and respond to individual schools’ needs prompted the development of a new process for organizing data. The new process, whose characteristics are illustrated below, takes a school-focused approach: it organizes, interprets, and integrates data from LSMS so as to highlight the needs of individual schools and support efforts to address them. Generically speaking, we call this process Data-Driven School Improvement (DSI).

DSI, as the name implies, must be based on data, organized so that the needs of each school are visible to those responsible for addressing them. Regular data collection and processing are essential to evaluate the impact of implemented actions on each school’s needs and determine their effectiveness. This approach is indispensable in taking the necessary steps towards ongoing improvement of schools.

The characteristics of DSI

The SIF as an instance of DSI

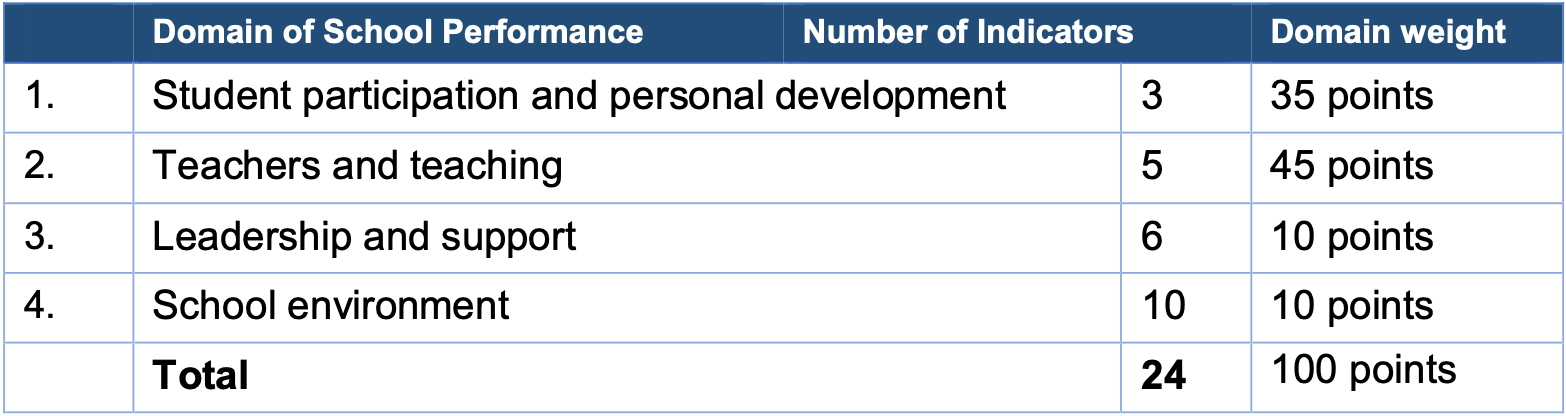

The PMIU in Punjab created the SIF in 2019-20, with technical assistance from Cambridge Education under the DFID-supported Punjab Education Support Programme (PESP). The goal was to improve the way data was collected and organized, prioritizing the needs of schools and making their performance the main focus. The SIF operates on an iterative cycle of data collection, processing, and utilization, beginning at the school level, where data is regularly gathered on various indicators through established methods. To structure this data, the SIF groups indicators into four domains of school performance and assigns each domain a weight based on its significance for the schools’ performance.

SIF Domains of School Performance, No. of Indicators, and Domain Weights in Punjab

Source: PMIU 2019

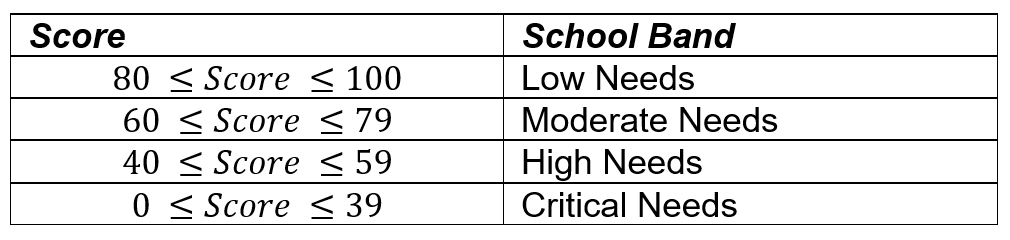

The collected data is then transmitted to the central module maintained by the Punjab Information Technology Board (PITB), where the School Status Index (SSI) of each school is calculated. The system categorizes the schools into bands based on their SSI score, which reflects the level of improvement the school requires. It is important to note that the SIF focuses on identifying the needs of schools rather than ranking their performance. This approach is aimed at avoiding the negative consequences that often result from ranking schools based on their performance.

School Status Index (SSI) Score and School Needs Categories
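The calculation described above, weighted domains combined into an index that is then mapped to a needs category, can be sketched as follows. This is only an illustration under stated assumptions, not the PITB implementation. The domain names, the weights, the 0-100 scale, the band cut-offs, and the convention that a lower SSI signals greater need are all hypothetical.

```python
# Hypothetical domains and percentage weights (summing to 100); the actual
# SIF domains and weights are defined by the PMIU and are not reproduced here.
DOMAIN_WEIGHTS = {
    "domain_1": 40,
    "domain_2": 30,
    "domain_3": 20,
    "domain_4": 10,
}

# Hypothetical cut-offs: (minimum SSI, needs category), highest band first.
# Assumes a lower SSI means the school needs more support.
NEEDS_BANDS = [
    (75, "low needs"),
    (50, "medium needs"),
    (0, "high needs"),
]

def school_status_index(domain_scores, weights=DOMAIN_WEIGHTS):
    """Weighted average of domain scores, each assumed to be on a 0-100 scale."""
    if set(domain_scores) != set(weights):
        raise ValueError("domain_scores must cover exactly the weighted domains")
    weighted_sum = sum(domain_scores[name] * w for name, w in weights.items())
    return weighted_sum / sum(weights.values())

def needs_category(ssi, bands=NEEDS_BANDS):
    """Map an SSI score to the first band whose minimum it meets."""
    for minimum, label in bands:
        if ssi >= minimum:
            return label
    raise ValueError("SSI is below the lowest band")

# One hypothetical school's domain scores.
school = {"domain_1": 60, "domain_2": 40, "domain_3": 50, "domain_4": 80}
ssi = school_status_index(school)
print(ssi, needs_category(ssi))
```

With these invented numbers the school scores 54 and falls in the "medium needs" band. The output is a category describing what the school requires, not a position in a league table, which matches the SIF's stated emphasis on needs over rankings.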

The SSI scores are then displayed on a dashboard available to all stakeholders. Based on the needs identified for each school, the system generates actions for officials at different tiers of the government and is designed to ensure that those actions are taken. The results of these actions are captured in the next iteration of the SIF cycle. If the SIF is functioning effectively, the number of schools in the high needs category should decrease over time through the repeated use of data to address their requirements. Thus, through the repeated calculation of the index for each school, the SIF can provide evidence of advancements and improvements in educational institutions.

But does it?

Scaling DSI innovations

The case of SIF: More is not always better

The Punjab SED launched the SIF throughout the province in February 2021. The introduction of SIF was met with widespread approval and agreement on its utilization. Through ongoing research supported by the GPE Knowledge, Innovation & Exchange (KIX) initiative, the Society for Advancement of Education (SAHE) is exploring the opportunities and challenges in scaling innovations in data-driven school improvement, including the SIF in Punjab (as well as KP). Emerging findings from the research, however, indicate challenges at every stage of the SIF’s iterative cycle during the scaling process. They highlight the need for better coordination among stakeholders, including school officials, sub-district and district officials, provincial education departments, and both national and international development partners, to provide a more enabling scaling system for the SIF to thrive in. Unevenness in the implementation and use of the SIF at various levels has also shown that more is not necessarily better. Optimal scaling of any innovation is a complex and ongoing process that depends not only on its technical viability but also on balancing factors such as financial sustainability, equity, and the range of effects it has on those affected. Thus, the question of whether EMIS in Pakistan can help improve schools leads to an even more challenging one: how to determine optimality in the scaled implementation of innovations that promote the use of EMIS for school improvement.